Today’s interactive screens may seem like magic, but as Lee Constable explains, the tech is decades old and based on surprisingly simple principles.

Discuss the application of Physics and Chemistry understanding in a real-life context that students will definitely be familiar with. This technology has evolved rapidly over the last 50 years, creating new jobs and changing how lives are lived. It opens up the discussion with year 6, 8, 9, and 10 students about new applications of scientific knowledge and future STEM careers that may not exist yet.


Let’s be honest with ourselves: we were already on phones far too often before COVID-19 swept into our lives. We’re constantly clicking and scrolling, zooming and swiping with our fingertips in a way that has become completely automatic as we seek information, entertainment and connection. Reading and note-taking on phones have become so second nature that I’ve caught myself more than once attempting to scroll down a physical paper page by running my finger along it.

Hard as it is to believe, there was once a pre-touchscreen world. And although Gen Z, who have had no experience of that world, can be forgiven for imagining dinosaurs may have roamed the Earth at the time of the first touchscreen, feebly swatting at it with their tiny T. rex arms, the rest of us (yes, even millennials like me) can dimly recall a time when swiping across a screen was something you only did to clean it.

Touchscreen tech has brought with it a whole new vocabulary – apps, swiping, pinch-to-zoom – and new gestures and behaviours, as we manipulate virtual objects with unremarked-upon ease. So right now, as you put your phone aside to engage with entertainment and information through the millennia-spanning medium of ink on paper, allow me to remind you of the excitement as we take a journey through time and the evolution of touchscreen tech.

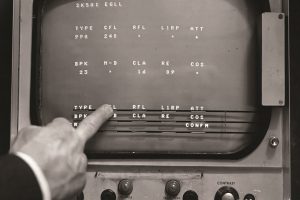

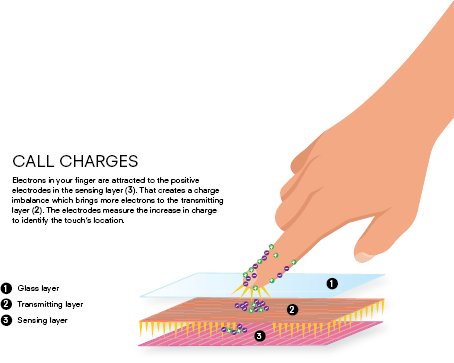

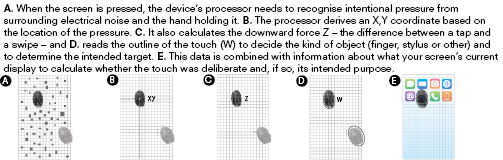

The most commonly used touchscreens today – capacitive screens – are almost as old as Baby Boomers: invented in 1966 for air traffic control radar screens at the UK’s Royal Radar Establishment. This first iteration worked by running a small current through a transparent sheet of material across the screen, which created a static field. The interference of a fingertip pushing on the screen was all it took to charge the plate below, changing the electric charge – capacitance – at the screen’s corners. Just as each key on a keyboard completes a corresponding circuit when your finger presses down, these early screens relied on the pressure of a finger causing the two layers of material to come into contact with each other, completing a circuit at the point of contact.

As this circuit could be completed with a touch at any location across the screen, the next challenge this early tech overcame was locating that point of contact. The electrical charge at each corner of the screen would vary, depending on the proximity of the circuit closure – so the central computer measured the difference in the charge at each corner of the screen and then calculated the location of the touch.
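To make the corner trick concrete, here is a minimal sketch of how a computer might turn four corner readings into a position. It assumes a deliberately simplified model in which each corner's reading grows in direct proportion to how close the touch is; real screens need calibration and noise filtering. The function name `locate_touch` and the 0-to-1 coordinate convention are illustrative assumptions, not details of the original radar hardware.

```python
def locate_touch(top_left, top_right, bottom_left, bottom_right):
    """Estimate a touch position from four corner readings.

    Assumption: a simplified linear model where a corner's reading is
    proportional to how near the touch is to that corner.
    Returns (x, y) with 0.0 = left/top edge and 1.0 = right/bottom edge,
    or None when nothing is touching the screen.
    """
    total = top_left + top_right + bottom_left + bottom_right
    if total <= 0:
        return None  # no charge drawn anywhere, so no touch
    # The share of the total seen by the right-hand corners gives x;
    # the share seen by the bottom corners gives y.
    x = (top_right + bottom_right) / total
    y = (bottom_left + bottom_right) / total
    return x, y


print(locate_touch(1.0, 1.0, 1.0, 1.0))  # equal readings: centre of the screen
print(locate_touch(0.0, 2.0, 0.0, 2.0))  # right-hand corners only: right edge
```

Equal readings at all four corners place the touch in the middle of the screen, while a touch near one edge pulls the estimate towards the corners on that side.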

I can only begin to imagine the excitement that air traffic controllers must have felt when the first radar touchscreen was installed in their workplace. I suspect that excitement may have turned to frustration, however, when they discovered its shortcomings.

In 1977 this clunky design was improved upon by the resistive screen. These are the screens that found their way into the interfaces of ATMs. Some may remember mashing their fingertips firmly against these cash-machine screens to make a selection, and while they may have required a bit more pressure than we’re accustomed to now, they were more accurate than their predecessor, which was still being used in radar screens (much to the continued annoyance, I assume, of their users).

A company called Elographics – which is still creating screens today under the name Elo Touch – achieved this feat by incorporating not one but two sheets layered on top of each other, hence the extra pushing needed. Conductive lines were etched into each sheet, horizontally on one and vertically on the other, to create a grid. Each line gave a unique voltage, and as your fingertip prodded the word “Withdrawal” it would also cause the two sheets to press together and their horizontal and vertical lines to touch at the point of impact. The resulting voltage told the central computer which two lines had touched – a kind of digital Battleship game, more mathematical than magical in reality.
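The Battleship comparison can be sketched in a few lines. This is an illustration only: it assumes each line's voltage rises in equal steps, and the step size `VOLTS_PER_LINE` and the function names are hypothetical choices, not figures from any real ATM screen.

```python
VOLTS_PER_LINE = 0.05  # hypothetical voltage step between neighbouring lines


def line_index(measured_volts, volts_per_line=VOLTS_PER_LINE):
    """Return the index of the line whose voltage is closest to the reading."""
    return round(measured_volts / volts_per_line)


def decode_press(row_volts, column_volts):
    """Turn the two measured voltages into a Battleship-style grid reference."""
    row = line_index(row_volts)
    column = line_index(column_volts)
    # Columns become letters (A, B, C...) and rows become numbers (1, 2, 3...).
    return f"{chr(ord('A') + column)}{row + 1}"


print(decode_press(0.15, 0.40))  # I4
print(decode_press(0.16, 0.39))  # slightly noisy readings still give I4
```

Rounding to the nearest line means a slightly noisy reading still lands on the right grid square, which is one reason a grid of distinct voltages can be more accurate than comparing four corners.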

In the 1990s, as mobile phone technology advanced, touchscreen tech also found its way into hand-held devices. In 1993, Apple introduced the Newton, a touchscreen device incorporating a calculator, address book, notebook and calendar all in one. A few years later a young me was enthusiastically stabbing away at the resistive (a little too resistant to the prod of my tiny fingers perhaps?) screen of a school computer and excitedly showing my awe-inspired (or at least humouring me by pretending to be so) parents the wonders of this miraculous technology.

The Newton and other devices like it would eventually merge with the increasingly compact mobile phone. The IBM Simon Personal Communicator, released in the mid ’90s, was a touchscreen device incorporating features similar to those of the Newton but – amazingly – including the capability to text and call. The device is often retrospectively called the first smartphone (although the term was coined much later), but with its chunky dimensions and half-kilogram weight it could also be dubbed the first technological brick.

This might explain why so many of us will remember with fondness our Nokia 3315s and subsequent models of near-indestructible mobile phone. Although they allowed for texts, calls and iconic games like Snake, touchscreen was still a few years off for the fingertips of 2000s teens on a budget (me). Instead, the first adopters of the highly expensive pocket-sized smartphone technology were business executives, poking at their Blackberries and similar devices with a pen-like stylus as they responded to urgent emails in the back of taxis, or (tsk tsk) at home in their beds or on their couches.

Fast forward to 2007, when Steve Jobs changed the future of phones by introducing the iPhone to a live audience. It was a touchscreen device that allowed, for the first time, multiple touchpoints to be detected and responded to simultaneously.

In fact, less than a month earlier, the LG Prada had been announced as the very first mobile phone with a capacitive touchscreen – but the multi-touch capability of the iPhone eclipsed any gadget that came before it. From the stage, Jobs demonstrated to audible gasps of amazement something that has become second nature to toddlers as they reach for screens on their parents’ laps: the two-finger pinch-to-zoom, which I admit I have also absent-mindedly attempted to do on computer screens, resulting only in smudged screens and disappointment.

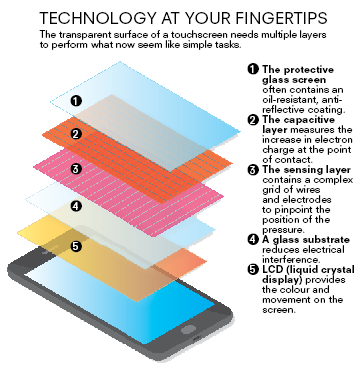

Although to many the iPhone seemed like it emerged from nowhere, its revolutionary screen actually had its basis in the previous century. iPhone screens – and most other smartphone screens today – are a return to the capacitive principles of that original air traffic control screen, but with a new take on the grid design of early ATM screens.

As Jobs’ thumb and index finger made contact with the iPhone’s screen to execute the world’s first public pinch-to-zoom, this is what the excited onlookers couldn’t see. The surface of the phone was covered in conductive material with an invisible interlocking diamond-shaped matrix mapped out across it.

Instead of sensing the change in capacitance at the corners of an entire screen, each tiny diamond in this charge-sensing matrix is able to act independently, like many four to eight-millimetre versions of the old radar screen. The diamonds register alterations in charge at the coordinates of thumb and forefinger at the same time and then track their movement across the matrix, signalling the changes in the underlying LCD display as the zoom command is deciphered.
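As a rough illustration of how two touches become a zoom command, the sketch below scans a small grid of charge readings for cells that have changed, then compares how far apart the two fingers are before and after the movement. The grid values, the `THRESHOLD` cut-off and the function names are all assumptions made for this example; a real phone's touch controller is far more sophisticated.

```python
import math

THRESHOLD = 0.5  # hypothetical change in charge that counts as a touch


def touched_cells(readings, threshold=THRESHOLD):
    """List the (row, column) of every cell whose charge changed enough."""
    return [
        (row, col)
        for row, line in enumerate(readings)
        for col, change in enumerate(line)
        if change > threshold
    ]


def pinch_scale(frame_before, frame_after):
    """Return how much the gap between two fingers grew between two frames.

    A result above 1 means the fingers spread apart (zoom in);
    below 1 means they pinched together (zoom out).
    """
    before = touched_cells(frame_before)
    after = touched_cells(frame_after)
    if len(before) != 2 or len(after) != 2:
        raise ValueError("expected exactly two touches in each frame")
    start_gap = math.dist(*before)
    end_gap = math.dist(*after)
    return end_gap / start_gap


# Two fingers start two cells apart, then spread to four cells apart.
frame_1 = [[0, 0, 0, 0, 0],
           [0, 1, 0, 1, 0],
           [0, 0, 0, 0, 0]]
frame_2 = [[0, 0, 0, 0, 0],
           [1, 0, 0, 0, 1],
           [0, 0, 0, 0, 0]]
print(pinch_scale(frame_1, frame_2))  # 2.0
```

Because every cell reports independently, both fingers show up in the same frame, which is exactly what the four-corner design could not do.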

Throughout the late 2000s and 2010s, as the sensitivity and resolution of touchscreens have improved, movements like the pinch-to-zoom have become intuitive, and we use smartphones that have similar or identical conventions regardless of brand, allowing us to treat the display on the screen and even our app icons as physical objects that respond to our touch by behaving in what have become predictable ways.

Despite a deluge of upgrades and technological advances, the only major screen change was introduced with the iPhone 5 in 2012, incorporating the multitouch-sensing capacitive layer into the LCD display to make the phone thinner, lighter and cheaper to produce.

So what could possibly come next in this evolutionary tale? Despite the introduction of Gorilla Glass – which promises hardier, smash-resistant screens that are still touch-responsive – almost all of us have experienced the grief of a cracked screen. Flexible, they are not.

However, Samsung and Motorola, among others, have recently been trialling a new screen to roll out, almost literally.

The foldable touchscreen is already here, and I wonder how long it will be until we can just scrunch up our smartphones and stuff them into our back pockets like a hastily received receipt or forgotten tissue. I can only hope that advance also comes with all-encompassing waterproofness, given how often that story ends in the laundry.

Despite doing much of the research for this article by touch in self-isolation, lying on my couch with a smartphone screen beaming a glow of information into my face as my fingers prodded, pressed and swiped, I have to admit my drafting process continues to run far more smoothly if I pick up a pen and apply it to paper. And while the paper doesn’t respond to my touch in the way my phone screen does (despite my best instinctive attempts), the touch of pen on paper does something to my central computer – my brain – that no screen seems to match.

This article was written by Lee Constable, science communicator, television presenter, children’s author and biologist, for Cosmos Magazine Issue 87.

Cosmos magazine is Australia’s only dedicated print science publication. Subscribe here to get your quarterly fill of the best Science of Everything, from the chemistry of fireworks to cutting-edge Australian innovation.
