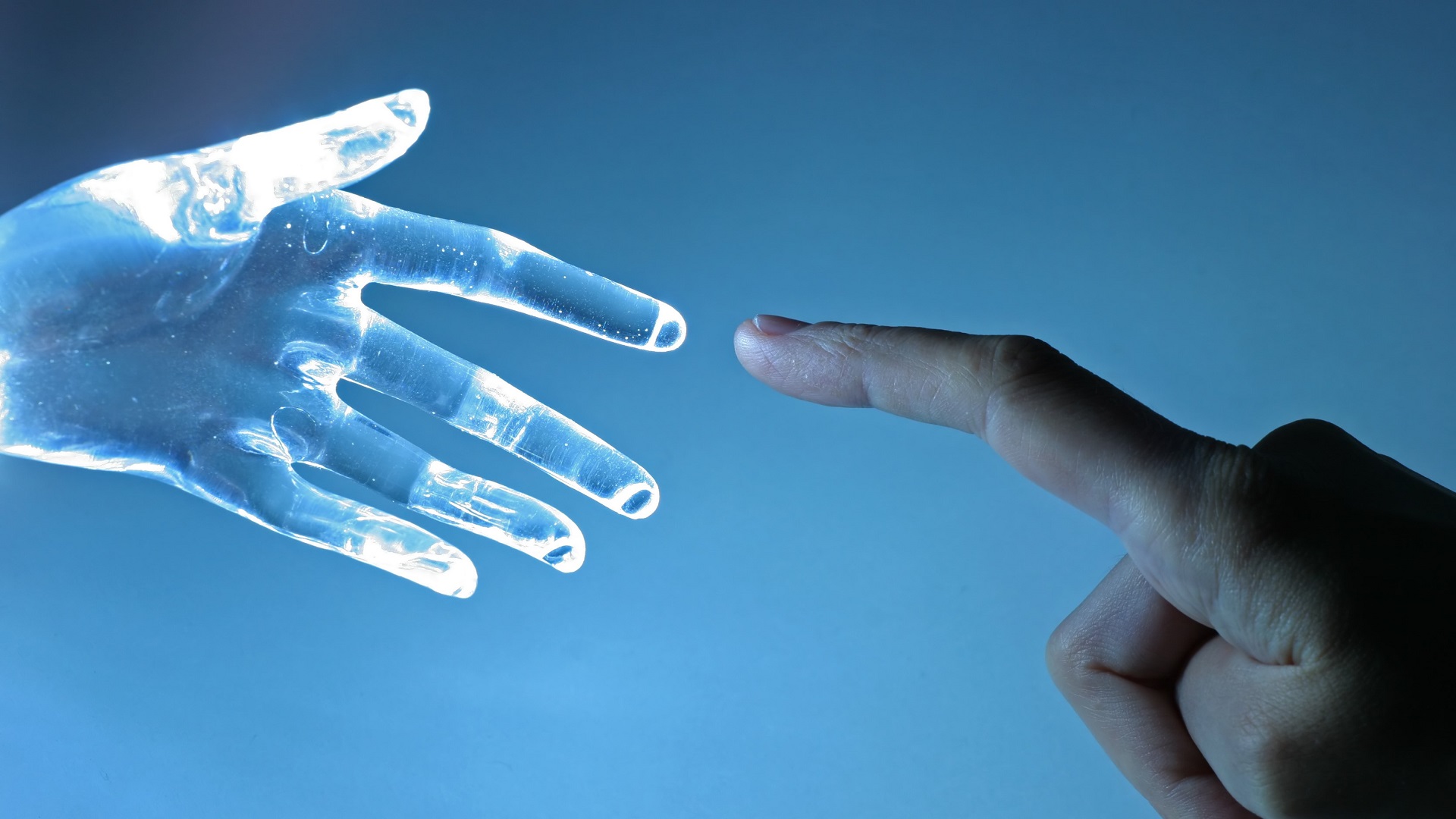

The artificial intelligence future is inevitable, but what it looks like is up to human decisions – we need to make sure we get it right before we roll it out on a larger scale.

Discuss the ethics of upcoming AI technology and use both articles to investigate alternative careers in STEM, such as those working in law, design and manufacture. These articles are best suited to students in Years 6 and above.

Word Count: 901 and 911

Why This Matters: It’s up to us to determine what the future of AI looks like.

From our phones to our toasters, the technology that surrounds us is increasingly turning ‘smart’ with artificial intelligence.

By 2023, just three years away, global spending on artificial intelligence systems is estimated to more than double, hitting almost $98 billion. And with the revolution comes concern about just how much of a role it will play in our lives.

According to Peter Eklund, a Professor in Artificial Intelligence and Machine Learning at Deakin University’s School of Information Technology, we already rely on AI in things like GPS and home devices connected through the Internet of Things.

“We already see a flavour of AI with Siri and Google Home and we’ll see more and more of these Internet of Things devices and we’ll need to be able to control and interact with them,” he says.

“Automatic speech recognition in Google Home, Siri and Alexa was the frontier of AI just a short while ago, but now it’s commoditised and we take it for granted.”

The artificial intelligence future in reality

AI has the potential to automate routine and tedious tasks that would make any human shudder. Think data entry and compiling massive amounts of information into organised lists.

“We’ll begin to see automated algorithms in the workplace. Algorithms will start to determine the optimal sequence of tasks we perform, particularly in white-collar jobs. We’ve seen this already to a certain degree,” Eklund says.

“Imagine I’m a school principal and I want to get a complete list of all the students in the school sorted by their age but I only have individual spreadsheets as class lists. You could imagine asking an AI to merge and sort these files, because that would be a really tedious task to do by hand, and if AI can provide those kinds of solutions then it’s a really big efficiency boost.”
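Eklund's class-list example is small enough to sketch in a few lines of Python. The snippet below is only an illustration, not a description of any real school system: the column names (`name`, `age`, `class`), the student records and the `merge_and_sort` helper are all made up for the example, and the "spreadsheets" are stand-in CSV text rather than real files.

```python
import csv
import io


def merge_and_sort(class_files):
    """Merge rows from several class lists and sort them by age, then name.

    Each item in class_files is an open CSV file (or file-like object)
    with the hypothetical columns: name, age, class.
    """
    students = []
    for class_file in class_files:
        for row in csv.DictReader(class_file):
            students.append(
                {"name": row["name"], "age": int(row["age"]), "class": row["class"]}
            )
    # Sort by age first; names break ties so the order is predictable.
    return sorted(students, key=lambda s: (s["age"], s["name"]))


# Two made-up class lists standing in for separate spreadsheets.
class_6a = io.StringIO("name,age,class\nAva,12,6A\nNoah,11,6A\n")
class_7b = io.StringIO("name,age,class\nMia,13,7B\nLiam,12,7B\n")

school_list = merge_and_sort([class_6a, class_7b])
for student in school_list:
    print(student["age"], student["name"], student["class"])
```

With real spreadsheets, the same idea would apply after exporting each class list to CSV and opening the files in place of the `io.StringIO` stand-ins.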

A common argument against AI is that it will replace human jobs. However, Eklund says that won’t always be the case.

“AI will never renovate your bathroom, and I’m yet to be convinced it can entertain people. I seriously doubt AI will be able to drive the weekly bin collection trucks, for instance,” he says.

Instead, he suggests that AI will contribute to low-skilled industries in the form of safety cameras, visual warnings and alerts delivered via augmented reality.

“I don’t see, particularly in the physical workplace, workers being replaced by AI and robotics.”

Robodebt – when automation goes bad

Although AI sounds very nice and efficient, as with any new technology, there have been instances of it going badly wrong.

Cast your mind back to 2016 when the Centrelink Robodebt scandal made headlines nationally.

In that case, Centrelink, the social services provider operated by the Federal Government, used a computer program to gather data from other government agencies, like the Taxation Office, and compare it with what people had reported to Centrelink. The program was designed to quickly check whether there were discrepancies between what a person reported to Centrelink and what their employer reported.

Those who were found to have a discrepancy were notified and instructed to check and explain the discrepancies.

If the discrepancy wasn’t explained, it was assumed to be an overpayment and a debt was issued.
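The matching step described above can be sketched in Python to show where a human check might sit. This is a simplified illustration and not the actual Centrelink program: the `find_discrepancies` function, the person IDs, the income figures and the "needs human review" status are all assumptions made for the example. The key design choice is that a mismatch is flagged for a person to look at, rather than being turned straight into a debt.

```python
def find_discrepancies(self_reported, employer_reported, tolerance=0.0):
    """Compare two sets of income figures and flag mismatches for review.

    Both arguments are dictionaries mapping a (hypothetical) person ID
    to an annual income figure. Nothing here decides that a debt exists.
    """
    flagged = []
    for person_id, reported in self_reported.items():
        employer_figure = employer_reported.get(person_id)
        if employer_figure is None:
            continue  # no employer record, so there is nothing to compare
        difference = employer_figure - reported
        if abs(difference) > tolerance:
            flagged.append(
                {
                    "person_id": person_id,
                    "difference": difference,
                    "status": "needs human review",
                }
            )
    return flagged


# Made-up figures for three people; only two have an employer record.
self_reported = {"A1": 20000, "B2": 15000, "C3": 18000}
employer_reported = {"A1": 20000, "B2": 17500}

flagged_cases = find_discrepancies(self_reported, employer_reported)
for case in flagged_cases:
    print(case["person_id"], case["difference"], case["status"])
```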

The problem was that more than two-thirds of the notices issued needed to be reassessed because they were inaccurate, and in many cases completely incorrect.

That led to people – even those in the process of contesting the debt – having to go onto a payment plan for a debt they didn’t owe, and caused serious distress.

“This is what happens when you get it wrong – the algorithm was wrong, and the scalability of the automation compounds the error geometrically, way out of proportion to what could have ever happened if this had been processed manually,” Eklund says.

“The result is a legal class action. The government looks really mean. The department looks incompetent and the public loses faith in everyone.”

What stands in the way of AI?

Mistakes aside, AI continues to find its way into our daily lives.

This month alone, supermarket giant Woolworths announced a trial using AI to scan and automatically detect fruit and vegetable purchases.

In a fast-paced world, where efficiency and automation are all the rage, it’s easy to get swept away in the utopian wave of AI, but Eklund suggests that it’s important for us to temper our ambitions, especially before we roll them out on a larger scale.

“Somebody at Centrelink should have said ‘let’s slow down a little here. Maybe we’ll try this for 50,000 cases and see if we get it right’, then they should have rolled it out in a much larger way once it’s been fully tested.”

“There are so many lessons in shows like Black Mirror about a dystopian view of the AI-enabled near future. It sends a real warning to us about what we definitely don’t want from AI.”

According to Eklund, when it comes down to it, the only thing standing in the way of AI being successful is us.

“I think people stand in the way, because ultimately the algorithms are being written by people.”

And despite the power of the technology, at the end of the day it’s people who will ultimately determine what the AI future looks like. Balancing those decisions will make sure we get it right.

How humans are teaching AI to become better at second-guessing

Why This Matters: Properly designed, artificial intelligence could assist humans not as tools, but as partners.

One of the holy grails in the development of artificial intelligence (AI) is giving machines the ability to predict intent when interacting with humans.

We humans do it all the time and without even being aware of it: we observe, we listen, and we use our past experience to reason about what someone is doing and why they are doing it, then come up with a prediction about what they will do next.

At the moment, AI may do a plausible job of detecting another person’s intent after the fact. It may even have a list of predefined responses that a human might make in a given situation. But when an AI system or machine only has a few clues or partial observations to go on, its responses can sometimes be a little…robotic.

Humans and AI systems

Lina Yao from UNSW Sydney is leading a project to get AI systems and human-machine interfaces up to speed with the finer nuances of human behaviour.

She says the ultimate goal is for her research to be used in autonomous AI systems, robots and even cyborgs, but the first step is focused on the interface between humans and intelligent machines.

“What we’re doing in these early phases is to help machines learn to act like humans based on our daily interactions and the actions that are influenced by our own judgment and expectations – so that they can be better placed to predict our intentions,” she says.

“In turn, this may even lead to new actions and decisions of our own, so that we establish a cooperative relationship.”

Yao would like to see awareness of less obvious examples of human behaviour integrated into AI systems to improve intent prediction.

Things like gestures, eye movement, posture, facial expression and even micro-expressions – the tell-tale physical signs when someone reacts emotionally to a stimulus but tries to keep it hidden.

This is a tall order, as humans themselves are not infallible when trying to predict the intention of another person.

“Sometimes people may take some actions that deviate from their own regular habits, which may have been triggered by the external environment or the influence of another person’s actions,” she says.

All the right moves

Nevertheless, making AI systems and machines more finely tuned to the ways that humans initiate an action is a good start. To that end, Yao and her team are developing a prototype human-machine interface system designed to capture the intent behind human movement.

“We can learn and predict what a human would like to do when they’re wearing an EEG [electroencephalogram] device,” Yao says.

“While wearing one of these devices, whenever the person makes a movement, their brainwaves are collected which we can then analyse.

“Later we can ask people to think about moving with a particular action – such as raising their right arm. So not actually raising the arm, but thinking about it, and we can then collect the associated brain waves.”

Yao says recording this data has the potential to help people unable to move or communicate freely due to disability or illness. Brain waves recorded with an EEG device could be analysed and used to move machinery such as a wheelchair, or even to communicate a request for assistance.

“Someone in an intensive care unit may not have the ability to communicate, but if they were wearing an EEG device, the pattern in their brainwaves could be interpreted to say they were in pain or wanted to sit up, for example,” Yao says.

“So an intent to move or act that was not physically possible, or not able to be expressed, could be understood by an observer thanks to this human-machine interaction. The technology is already there to achieve this, it’s more a matter of putting all the working parts together.”

AI systems and humans could be partners for life

Yao says the ultimate goal in developing AI systems and machines that assist humans is for them to be seen not merely as tools, but as partners.

“What we are doing is trying to develop some good algorithms that can be deployed in situations that require decision making,” she says.

“For example, in a rescue situation, an AI system can be used to help rescuers take the optimal strategy to locate a person or people more precisely.”

“Such a system can use localisation algorithms that use GPS locations and other data to pinpoint people, as well as assessing the window of time needed to get to someone, and making recommendations on the best course of action.”

“Ultimately a human would make the final call, but the important thing is that AI is a valuable collaborator in such a dynamic environment. This sort of technology is already being used today.”

But while working with humans in partnership is one thing, working completely independently of them is a long way down the track. Yao says autonomous AI systems and machines may one day look at us as belonging to one of three categories after observing our behaviour: peer, bystander or competitor. While this may seem cold and aloof, Yao says these categories may change dynamically from one to another as the context evolves. And at any rate, she says, this sort of cognitive categorisation is actually very human.

“When you think about it, we are constantly making these same judgments about the people around us every day,” she says.
